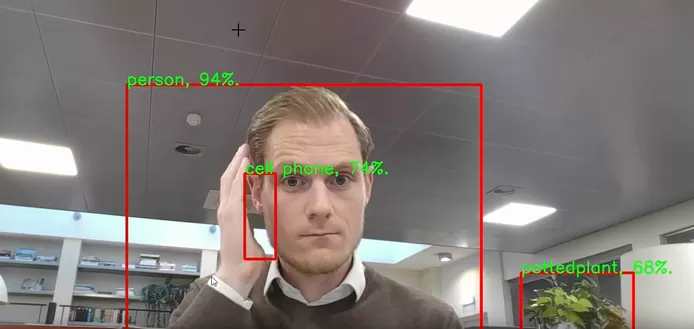

A Dutch man was fined 380 euros ($400) after an AI-powered camera flagged him for talking on his phone while driving. He claims, however, that he was only scratching his head and that the system made a mistake.

In November of last year, Tim Hansen received a fine for allegedly talking on his mobile phone while driving a month earlier. He was shocked, mainly because he didn’t remember using his phone at the wheel on that particular day, so he decided to look up the incriminating photo via the Central Judicial Collection Agency. At first glance, Tim does appear to be talking on his phone, but a closer look reveals that he isn’t holding anything in his hand. He was simply scratching the side of his head, and the camera interpreted the position of his hand as holding a phone. Even more baffling, the human who reviewed the photo and validated the fine didn’t spot the “false positive” either.

Photo: Monocam

Hansen, who works in IT developing algorithms that edit and analyze images, drew on his professional experience to explain how the police camera system, Monocam, likely works and why it can make mistakes. Although he couldn’t test Monocam himself, he described how systems of this kind are designed and why they can produce false positives.

“If a model has to predict whether the answer is ‘yes’ or ‘no’, it can of course also happen that the model is wrong,” Tim wrote. “In the case of my ticket, the model indicated that I was holding a telephone, while that was not the case. That is what we call a false positive. A perfect model only predicts true positives and true negatives, but 100% correct prediction is rare.”
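To make Hansen’s terminology concrete, here is a minimal Python sketch that sorts a handful of yes/no predictions into the four possible outcomes. The labels are invented for illustration and the function name `confusion_counts` is hypothetical; this is not how Monocam itself reports results.

```python
from collections import Counter


def confusion_counts(predicted, actual):
    """Tally each (prediction, ground truth) pair into one of four outcomes."""
    counts = Counter()
    for p, a in zip(predicted, actual):
        if p and a:
            counts["true_positive"] += 1
        elif p and not a:
            counts["false_positive"] += 1  # phone flagged, but none was held
        elif not p and a:
            counts["false_negative"] += 1  # phone held, but not flagged
        else:
            counts["true_negative"] += 1
    return counts


# Hypothetical labels: True means "driver is holding a phone".
predicted = [True, True, False, False, True]
actual = [True, False, False, True, True]

counts = confusion_counts(predicted, actual)
# Accuracy: share of predictions that were right (true positives + true negatives).
accuracy = (counts["true_positive"] + counts["true_negative"]) / len(actual)
print(dict(counts), accuracy)
```

The second photo in this made-up list plays the role of Hansen’s ticket: the model said “phone” when there was none.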

The IT specialist explained that systems like Monocam have to be trained on a large set of images divided into two or three groups: a training set, a validation set and a test set (the validation set is sometimes omitted). The first set is used to teach the algorithm which objects appear in which images and which properties (colors, lines, etc.) belong to them, the second to tune the algorithm’s hyperparameters, and the third to measure how well the finished system actually performs.
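A three-way split like the one Hansen describes can be sketched in a few lines of Python. The 70/15/15 proportions, the fixed seed, the file names and the helper name `split_dataset` are illustrative assumptions, not details of how the police model was trained.

```python
import random


def split_dataset(items, train_pct=70, val_pct=15, seed=0):
    """Shuffle items, then cut them into training, validation and test sets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = len(shuffled) * train_pct // 100
    n_val = len(shuffled) * val_pct // 100
    train = shuffled[:n_train]                 # used to learn the model's parameters
    val = shuffled[n_train:n_train + n_val]    # used to tune hyperparameters
    test = shuffled[n_train + n_val:]          # held back to measure final performance
    return train, val, test


# Hypothetical image file names standing in for labeled photos.
images = [f"img_{i:03d}.jpg" for i in range(100)]
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))  # 70 15 15
```

Shuffling before splitting keeps all three sets drawn from the same mix of photos, and because the sets do not overlap, the test score reflects images the model never saw during training or tuning.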

Photo: Tim Hansen

“The algorithm we used, and that of the police, may suspect that a telephone is present because the training dataset contains many examples of people calling with a telephone in their hand next to their ear,” Tim said. “It may well be that the training dataset contains few or no photos of people sitting with an empty hand at their ear. In that case, whether a phone is actually held in the hand becomes less important to the algorithm; it is enough for the hand to be close to the ear. To improve this, more photos should be added in which the hand is empty.”
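The shortcut Hansen describes can be reproduced with a deliberately tiny toy. The sketch below assumes each photo has already been reduced to two hand-coded yes/no features (the hypothetical names `hand_near_ear` and `phone_visible`) and fits a one-feature rule, a simplified decision stump, that picks whichever feature best matches the labels. A real image model learns from pixels and is far more complex; this only illustrates how missing examples can push a learner toward the wrong cue.

```python
FEATURES = ["hand_near_ear", "phone_visible"]  # hypothetical hand-coded features


def fit_stump(examples):
    """Pick the single feature whose value alone best matches the label."""
    def accuracy(name):
        return sum(ex[name] == ex["label"] for ex in examples) / len(examples)
    return max(FEATURES, key=accuracy)


def make(hand, phone, label, n):
    """Create n identical toy examples."""
    return [{"hand_near_ear": hand, "phone_visible": phone, "label": label}
            for _ in range(n)]


# Toy training data with no "empty hand at the ear" photos. In one photo the
# phone is hidden by the hand, so phone_visible is slightly less reliable.
original = (make(True, True, True, 3) + make(True, False, True, 1)
            + make(False, False, False, 4))
# The same data plus three photos of an empty hand next to the ear.
augmented = original + make(True, False, False, 3)

# A pose like Hansen's: hand at the ear, no phone.
hansen = {"hand_near_ear": True, "phone_visible": False}
for name, data in [("original", original), ("augmented", augmented)]:
    feature = fit_stump(data)
    print(name, feature, "predicts phone:", hansen[feature])
```

Trained on the original data, the rule keys on hand position and flags the empty-handed pose as a phone call; adding just three empty-hand photos makes the visible phone the better predictor, which mirrors the fix Hansen proposes.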

Hansen argues that, because so many variables can influence an algorithm’s decision, human review is needed to keep the number of false positives to a minimum. In his case, however, a human examined the photo captured by the camera and confirmed the fine anyway, so that safeguard isn’t foolproof either.

The Dutch driver has contested the fine and expects a favorable outcome, but he will now have to wait up to 26 weeks for an official verdict. His case went viral in the Netherlands and in neighboring countries like Belgium, where some institutions are calling for the installation of cameras capable of detecting mobile phone use while driving, but Tim’s story shows that such systems are far from 100% reliable.